Sunday 2 November 2014

Templated C++ classes with friend functions and implementation-header separation

In the last two posts we saw how to call a constructor from another constructor using friend functions and how to separate the implementation from the header when C++ templates are used. In this post, I explain how to do both at once, i.e., how to use friend functions and templates with the implementation in a separate file from the header. One would expect this to be a pretty straightforward extension but, unfortunately, there are a few details that need to be carefully considered.
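As a rough illustration of the kind of detail involved, here is a minimal single-file sketch. It assumes the friend is itself a function template, and the names `Pair` and `sum_of` are hypothetical, not taken from the post. The comments mark where the header and the implementation file would begin. The friend template is declared before the class, named with `<>` inside it, and explicitly instantiated alongside the class.

```cpp
#include <cassert>

// ---- Pair.h (hypothetical header): declarations only ----
template <typename T> class Pair;               // forward-declare the class template
template <typename T> T sum_of(const Pair<T>&); // forward-declare the friend template

template <typename T>
class Pair {
public:
    Pair(T first, T second);

private:
    // The <> says: befriend the specialization of the template declared above,
    // rather than declaring a new non-template function.
    friend T sum_of<>(const Pair<T>& p);

    T first_;
    T second_;
};

// ---- Pair.cpp (hypothetical implementation file) ----
template <typename T>
Pair<T>::Pair(T first, T second) : first_(first), second_(second) {}

template <typename T>
T sum_of(const Pair<T>& p) {
    return p.first_ + p.second_; // private access is allowed through friendship
}

// Explicit instantiations: the class and the friend function are listed separately.
template class Pair<int>;
template int sum_of<int>(const Pair<int>&);
```

With this layout, client code that includes only the header can use `Pair<int>` and `sum_of`, but any other type would need its own explicit instantiation in the implementation file.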

C++ Templates: Separate definition and implementation

Templates in C++ often force the developer either to merge the function prototypes and their implementation in a single header file (which is awkward), or to #include the implementation file in their application (i.e., do something like #include "MyClass.cpp", which is even more awkward!). C++11 introduced extern template declarations (and removed the rarely implemented export keyword for templates), but here I'm going to present a neat way to deal with this peculiarity through an easy example.
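One common way to keep template code out of the header is explicit instantiation. It may or may not be the approach the post itself takes. The sketch below is a single file whose comments mark where each file would begin, and the class name `Box` is hypothetical.

```cpp
#include <cassert>

// ---- Box.h (hypothetical header): declarations only ----
template <typename T>
class Box {
public:
    explicit Box(T value);
    T get() const;

private:
    T value_;
};

// ---- Box.cpp (hypothetical implementation file, would #include "Box.h") ----
template <typename T>
Box<T>::Box(T value) : value_(value) {}

template <typename T>
T Box<T>::get() const {
    return value_;
}

// Explicit instantiation definitions: code for these types is generated here,
// so other translation units only need the declarations from the header.
template class Box<int>;
template class Box<double>;

// ---- client code (would #include "Box.h" only) ----
// Since C++11 a client may also write `extern template class Box<int>;`
// to suppress implicit instantiation in its own translation unit.
int boxed_sum() {
    Box<int> a(2);
    Box<int> b(40);
    return a.get() + b.get();
}
```

The trade-off is that the set of supported types is fixed in the implementation file. Using `Box<float>` from client code would fail at link time until a matching instantiation is added.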

Saturday 1 November 2014

C++: calling a constructor from within a constructor

Constructors within constructors? This is business as usual for the Java programmer, but what about C++? Unfortunately, it turns out that it's not such a straightforward task... In this post we are going to work around this with a simple example: a class which can be used to read data from text files. The key to this approach goes by the name of friend function! A friend function is a function which (i) is not a member function of a class, but (ii) has access to the private members of that class, and hence to the private fields of objects that are passed to it as arguments.
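The idea can be sketched as follows: every constructor hands its work to one friend function that fills in the private fields. The class `DataReader`, the helper `init_reader` and the default values are hypothetical, and the sketch only stores its settings instead of opening a file.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

class DataReader; // hypothetical class, forward-declared for the helper below

// Shared initialisation routine; it is declared a friend inside the class.
void init_reader(DataReader& r, const std::string& path, std::size_t buffer_size);

class DataReader {
public:
    // Each constructor forwards to the same friend function.
    DataReader() { init_reader(*this, "data.txt", 1024); }
    explicit DataReader(const std::string& path) { init_reader(*this, path, 1024); }
    DataReader(const std::string& path, std::size_t buffer_size) {
        init_reader(*this, path, buffer_size);
    }

    const std::string& path() const { return path_; }
    std::size_t buffer_size() const { return buffer_size_; }

private:
    friend void init_reader(DataReader& r, const std::string& path,
                            std::size_t buffer_size);

    std::string path_;
    std::size_t buffer_size_;
};

// Not a member, yet it may write to the private fields of its argument.
void init_reader(DataReader& r, const std::string& path, std::size_t buffer_size) {
    r.path_ = path;
    r.buffer_size_ = buffer_size;
}
```

Since C++11, delegating constructors offer a built-in alternative for this particular use case.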

Tuesday 21 October 2014

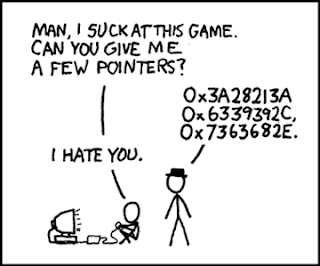

CUDA and pointers to pointers

Say you have a CUDA kernel that operates on a number of different arrays d_A1, d_A2, ..., d_An which are not stored consecutively in device memory, i.e., there is no single array d_A which stores the elements of d_A1, d_A2, ..., d_An in some particular order. If you need to pass all these arrays to the kernel, pointers to pointers do the job. In this post we'll see how to use pointers to pointers in CUDA C.

Monday 20 October 2014

High-performance matrix-vector multiplication in CUDA C

Here I present a custom kernel for matrix-vector multiplication written in CUDA C, together with some benchmarking results on a Tegra K1 (on a Jetson TK1 development board) and a comparison to the cuBLAS function cublasSgemv. This is an open-source project which is hosted on GitHub. This post comes, as I promised, as a sequel to an older post about matrix-vector multiplication in CUDA using shared memory. Further optimisation of the kernel is also possible (there are a few ideas), but for the time being I'm presenting some encouraging results...

Sunday 19 October 2014

Timing CUDA kernels

Are you up for benchmarking your CUDA application? Are you looking for the easiest possible way on earth to time your kernels? Then you're in the right place, because in this post we're going through some code snippets to help you out. The implementation I present today is inspired by MATLAB's simple tic-toc interface. You start the timer with a tic() and get the elapsed time with a toc(). This saves a lot of lines of code and keeps your code simple and tidy.
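To give a feel for the interface only, here is a host-side sketch of tic() and toc() built on std::chrono. It is not the post's CUDA implementation: kernel launches are asynchronous, so timing device code needs synchronisation that this host-only version does not perform. The global `g_tic_start` and the choice of milliseconds are assumptions.

```cpp
#include <cassert>
#include <chrono>

namespace {
// Hypothetical global holding the time of the most recent tic().
std::chrono::steady_clock::time_point g_tic_start;
}

// Start (or restart) the timer.
void tic() {
    g_tic_start = std::chrono::steady_clock::now();
}

// Return the time elapsed since the last tic(), in milliseconds.
double toc() {
    const auto elapsed = std::chrono::steady_clock::now() - g_tic_start;
    return std::chrono::duration<double, std::milli>(elapsed).count();
}
```

Usage is a tic() before the code of interest and a toc() after it. Because steady_clock is monotonic, the returned value is never negative.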

Friday 17 October 2014

CUDA C - Tutorials and other resources

This is a collection of tutorials, blogs, articles and other resources for CUDA C that I hope you'll find useful. Feel free to leave a comment with anything you think can help people learn CUDA and optimise their code. I am planning to keep this post continuously updated, so stay tuned. Most of the links hosted here point to free resources.

Tuesday 14 October 2014

Matrix-vector multiplication using shared memory

Matrix-vector multiplications, as well as matrix-matrix ones, are essential for any sort of numerical computation. GPGPUs allow massive parallelisation of such operations. This post is about doing matrix-vector multiplications using CUDA with shared memory, a type of on-chip memory that is much faster than the global memory of the device. In fact, shared memory can be up to 100 times faster than global memory, provided that there are no bank conflicts between the threads. Algebraic operations such as matrix-vector multiplications can benefit a lot from the use of shared memory, as we will show in this post.

Monday 13 October 2014

Static allocation of __device__ vars

This is a brief post on how to allocate device memory statically using the __device__ keyword. We explain how one can statically allocate memory space on the device and initialize it as in standard C code. This memory is then available to the device but cannot be directly referenced from the host (unless cudaGetSymbolAddress is used, as discussed in a previous post).

Saturday 11 October 2014

Memories from CUDA - Pinned memory (III)

The main motivation for using pinned memory is to perform asynchronous transfers of data from the host to the device. This is accomplished using cudaMemcpyAsync and related functions. Pinned (or page-locked) memory also brings certain performance benefits of its own, and in certain cases a further gain can be obtained by using write-combined memory. In this post we give a few examples of how to allocate pinned memory and we investigate its features.

Friday 10 October 2014

Memories from CUDA - Symbol Addresses (II)

In a previous post we gave a simple example of accessing constant memory in CUDA from inside a kernel function. What if we need to access it from the host (i.e., pass it as an argument to a kernel)? In this post we focus on how to use cudaGetSymbolAddress to get the address of a device variable (which can be a __constant__ or a __device__ variable). Modern architectures support the keyword __managed__, but we're going to do things the old way...

Memories from CUDA - Constant memory (I)

This is a post for all of us who feel we can't memorize all these different types of CUDA memories. It is the first in a series of upcoming posts on memory management in CUDA. Here, we present constant memory and we explain how it can be accessed from the device through a comprehensive step-by-step example.

Thursday 9 October 2014

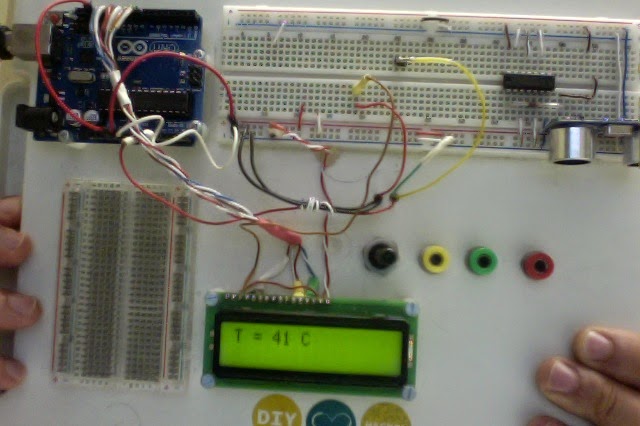

Jetson to Arduino over Serial

OK, so this is the plan: every time the temperature of my Jetson TK1 GPU reaches 60C or so, a siren starts howling, a blinding red light is blinking in the dark underground lab and it reflects on screens that output incomprehensible sequences of numbers and data, a robotic voice goes "evacuate the building," "this is an emergency" and other catchy phrases etc, etc..., but first I need to hook up Jetson TK1 to an Arduino board which outputs the temperature to an LCD display... Let's see how this is done...

